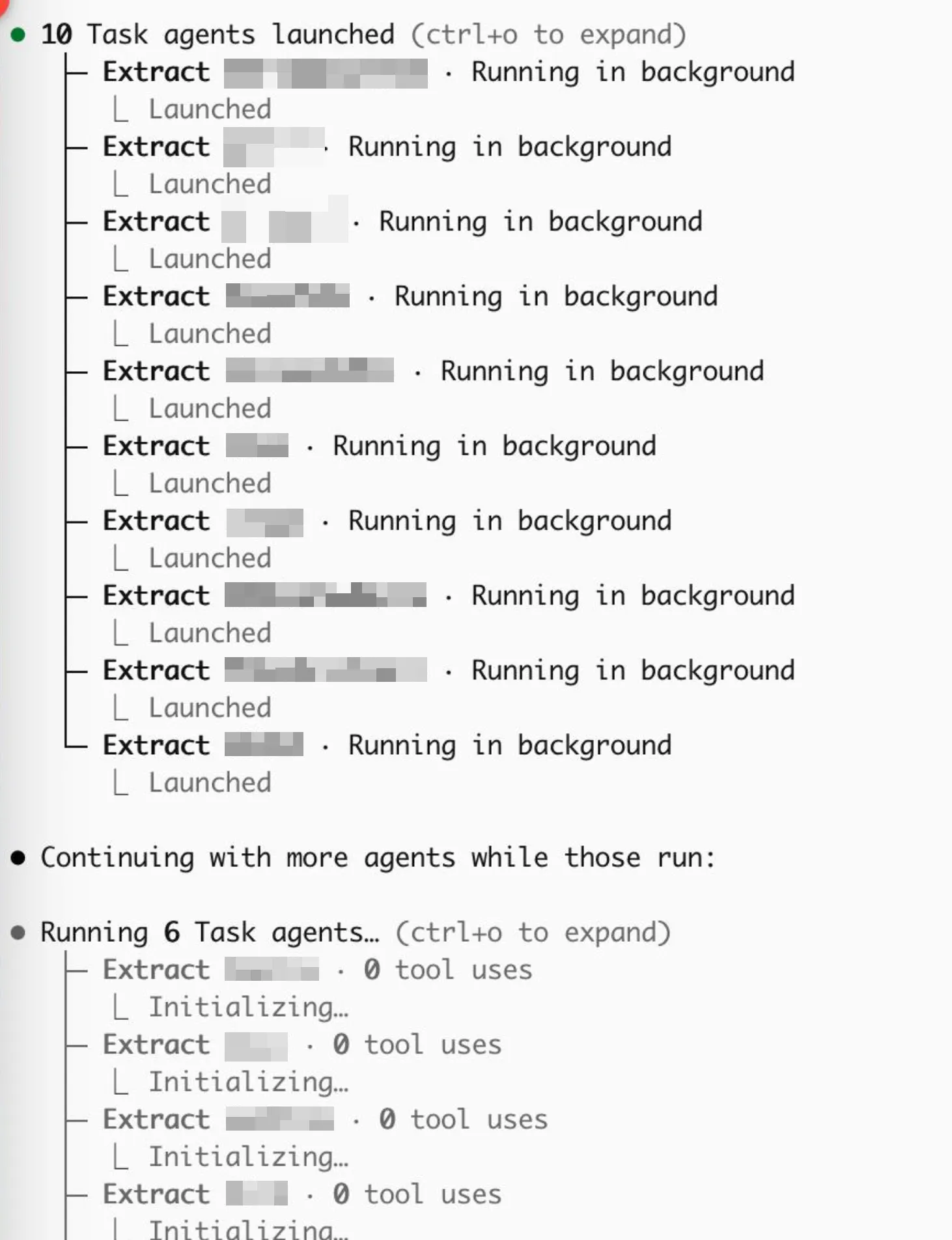

Anthropic’s Societal Impacts team is currently running interviews with Claude.ai users and has shared some insights from the initial round. The interviewer is Claude. It’s a fun exercise for thinking about AI in general, and maybe shaping it a bit.

First, of course, I asked Claude what it had been prompted to do:

Warm-up: What’s the last thing you used an AI chatbot for? (You already answered—implementing code)

Vision question: If you could wave a magic wand and AI could help you with anything in your life, what would you choose? (With follow-ups to understand the deeper value/aspiration behind your answer)

Positive experience: Can you tell me about a time you’ve worked with AI that felt like a step toward that vision?

Concerns: Are there ways that AI might be developed or deployed that would be contrary to your vision or what you value? (With follow-ups to understand why)

Optional: I might go back to something interesting you mentioned earlier to explore it deeper

Wrap-up: Any final thoughts on AI’s role in your life or society?

The goal throughout is to understand your perspective through follow-up questions that dig into the “why” behind your answers—what matters to you and what drives your thinking.

Part of my answer to what I would choose if I could wave a magic wand and AI could help with anything:

I want to drive and ideate, and determine what’s important, but I want AI to design, to implement, to give me things to iterate on and adjust based on my taste and values.

I found myself reaching for a metaphor, thinking of the book Atlas Shrugged:

It is like a man, a train conductor, gripping the controls of a train, controlling thousands of horsepower to move hundreds of people; but for the mind.

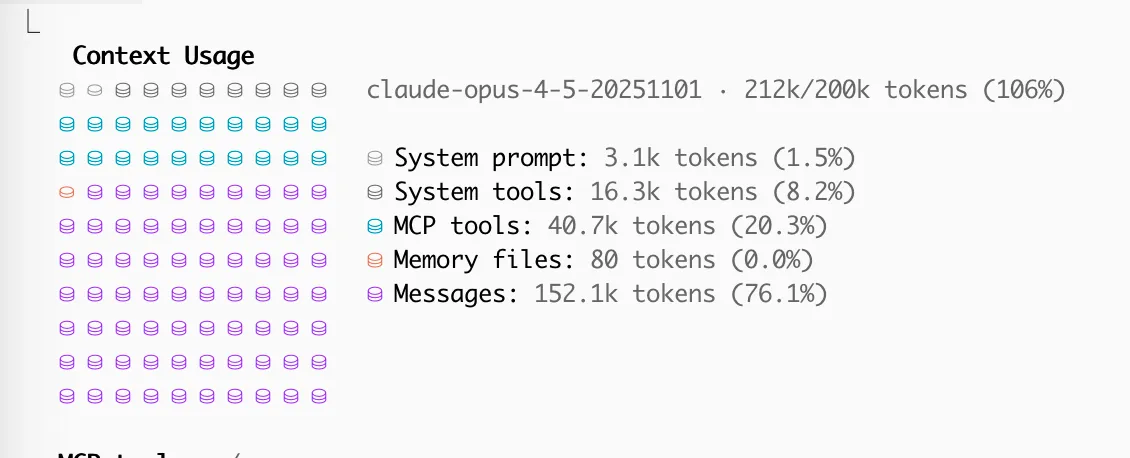

Someone once told me AI would turn me from a PhD student working in the trenches on one project at a time to a professor orchestrating fleets of AI students. That framing stuck with me:

A lot of AI debate is about what gets lost. […] That metaphor frames it the other way around: All PhD students will become professors! Science will 100x.

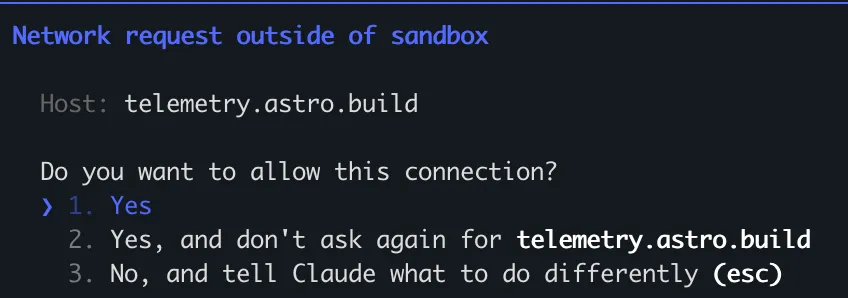

But I’m not naively optimistic (I hope?). I listed what would be horrible: AI deciding over humans, mass surveillance, social scoring, and delegating thinking to AI.

I delegate things I understand. […] Delegating thinking would mean having AI come up with some formula or math or function, which you have no intellectual way to grasp. You rely on the AI to be correct. You don’t learn. You don’t think.

There are two ways to tackle a problem with AI:

1. You give the task to AI, it manages to solve it (because AGI) and you have a solution.
2. You look at the task, you don’t understand something, you ask the AI to help you understand. […]

In the latter, man has grown and become stronger, learned something new and useful. […] In the former, we become weaker, our thinking atrophies.

I also raised fears about surveillance in particular:

I think it increases the stakes. War was always horrible. The atomic bomb, cluster bombs, napalm, chemical weapons upped the stakes. All those human rights abuses were already happening and horrible, and AI ups the stakes.